The race toward Artificial Intelligence has shifted from a competitive advantage to a survival imperative in highly regulated industries. However, while CTOs and quality leaders envision a future of automated predictions and autonomous operations, a silent yet critical obstacle threatens these investments: data quality for AI.

In markets where an error is not merely a technical failure but a compliance and regulatory risk, AI is only as reliable as the data that feeds it. Deploying advanced algorithms on an inconsistent data foundation is the fastest way to turn innovation into operational risk.

Read on to understand why data quality is a non-negotiable prerequisite for any successful AI initiative and how to structure AI governance to ensure integrity and compliance.

What is data quality?

In the corporate environment, data quality goes far beyond the idea of “clean data.” It also encompasses the concept of fitness for purpose. In other words, is the data accurate, complete, and reliable enough to support critical decision-making and audits?

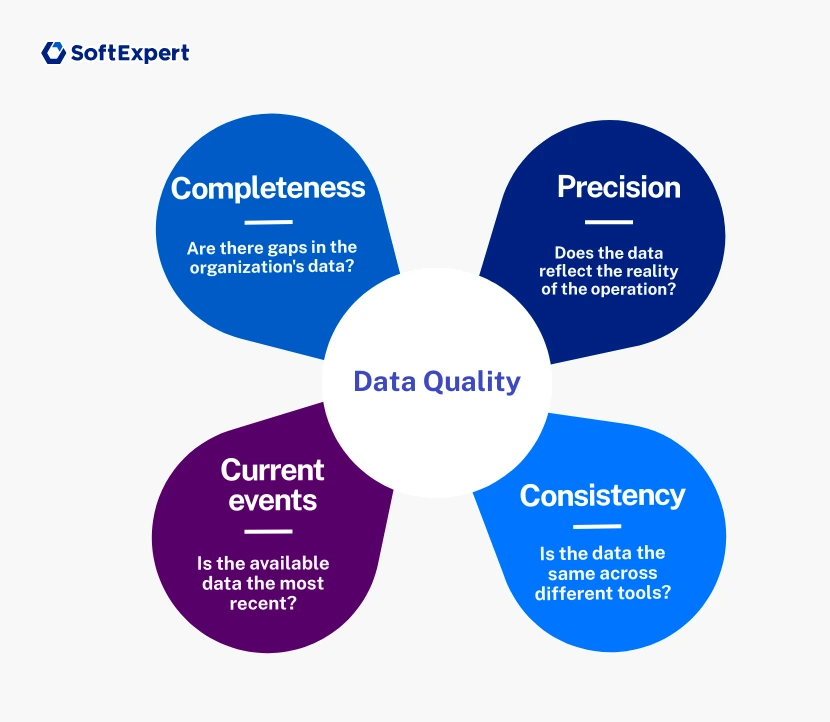

For compliance and quality leaders, data quality must be assessed across key pillars, often referenced in frameworks such as DAMA-DMBOK:

- Accuracy: Does the data reflect reality on the shop floor or in the laboratory?

- Completeness: Are there gaps or null values in mandatory fields of safety or compliance forms?

- Consistency: Is the product code the same across the ERP, the quality management system (QMS), the risk management platform, and other tools?

- Timeliness: Is the information available to AI the most up-to-date version, or is it already outdated?

Ignoring these pillars comes at a high cost. According to Gartner, poor data quality can cost organizations an average of USD 12.9 million per year. In addition, studies from MIT Sloan Management Review indicate that the cost of bad data can represent between 15% and 25% of a company’s revenue, an unacceptable waste in markets with tight profit margins.
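
Three of the pillars above (completeness, consistency, and timeliness) can be measured automatically. Accuracy usually cannot, because it requires comparing records against what actually happened on the shop floor. The following is a minimal sketch, not a reference implementation: the records, the field names, the ERP lookup, and the 90-day freshness threshold are all hypothetical values chosen for illustration.

```python
from datetime import datetime, timedelta

# Hypothetical nonconformity records; field names are illustrative only.
records = [
    {"id": 1, "product_code": "P-100", "severity": "high", "updated_at": datetime(2024, 5, 1)},
    {"id": 2, "product_code": "P-200", "severity": None, "updated_at": datetime(2024, 5, 20)},
    {"id": 3, "product_code": "P-300", "severity": "low", "updated_at": datetime(2023, 1, 10)},
]

# Hypothetical product codes for the same record IDs as stored in the ERP.
erp_codes = {1: "P-100", 2: "P-200", 3: "P-301"}


def completeness(rows, field):
    """Share of rows where a mandatory field is filled in."""
    filled = sum(1 for row in rows if row.get(field) not in (None, ""))
    return filled / len(rows)


def inconsistent_ids(rows, reference, field):
    """IDs whose value in this system differs from the reference system."""
    return [row["id"] for row in rows if reference.get(row["id"]) != row[field]]


def stale_ids(rows, as_of, max_age):
    """IDs of rows that have not been updated within the allowed age."""
    return [row["id"] for row in rows if as_of - row["updated_at"] > max_age]


as_of = datetime(2024, 6, 1)  # fixed reference date so the example is repeatable
severity_completeness = completeness(records, "severity")
mismatched = inconsistent_ids(records, erp_codes, "product_code")
stale = stale_ids(records, as_of, timedelta(days=90))

print(f"severity completeness: {severity_completeness:.0%}")
print(f"product code mismatches vs ERP: {mismatched}")
print(f"records older than 90 days: {stale}")
```

In this sample, one of three records has no severity, and record 3 is both out of sync with the ERP and stale, so it would be a candidate for review before any model is trained on it.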

What is the relationship between data quality and AI?

Artificial Intelligence, especially Machine Learning, does not “know” anything on its own. It identifies statistical patterns across large volumes of information. As a result, this relationship is one of direct dependency: data is the input that fuels the algorithm.

For this reason, if the historical database of nonconformities used to train a predictive model contains classification errors or ambiguous descriptions, the AI will learn those errors. This phenomenon is known as GIGO, Garbage In, Garbage Out. When poor-quality information is used, the technology produces poor-quality outcomes.

In the current context of Generative AI and large language models, the primary risk of low data quality is hallucination. When fed with fragmented or contradictory corporate data, an LLM may generate audit reports that appear plausible but are factually incorrect.

In other words, the Artificial Intelligence fabricates information, hence the term “hallucination”, and presents it as if it were real. This exposes organizations to severe risks with regulatory bodies such as ANVISA and the FDA, as well as in audits against ISO standards, and can seriously impact business operations.

Why does AI not work without data quality?

A lack of data integrity creates failures that are often only detected after the damage has already occurred. Some of the most common issues that arise when AI is used without proper data quality include:

- Algorithmic and operational bias: Unbalanced or incomplete data can bias Artificial Intelligence. For example, if a production line fails to record micro-stoppages in the system, a predictive maintenance AI will learn that the machine operates flawlessly and will fail to anticipate an actual breakdown.

- Loss of trust: The adoption of new technologies depends on user confidence. If an AI recommends a corrective action plan (CAPA) based on poor-quality data and the manager identifies the error, trust in the tool is compromised, effectively rendering the technology investment useless.

- Data team inefficiency: IBM reports that data scientists spend approximately 80% of their time preparing and cleaning data, leaving only 20% for actual analysis and modeling. Without data quality at the source, highly skilled professionals become little more than “data custodians.”
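
The micro-stoppage example in the first item can be made concrete with simple arithmetic. The sketch below assumes a hypothetical line that ran for 400 hours with 20 real micro-stoppages, of which only 2 were logged. The figures and the function name are invented for illustration.

```python
def mean_time_between_stoppages(operating_hours, stoppages):
    """Average operating hours between recorded stoppages."""
    if stoppages == 0:
        return float("inf")  # no recorded stoppages: the machine looks flawless
    return operating_hours / stoppages


operating_hours = 400.0
actual_stoppages = 20  # what really happened on the line (hypothetical)
logged_stoppages = 2   # what was entered in the system (hypothetical)

true_interval = mean_time_between_stoppages(operating_hours, actual_stoppages)
observed_interval = mean_time_between_stoppages(operating_hours, logged_stoppages)

print(f"real interval between stoppages: {true_interval:.0f} h")
print(f"interval the model sees:         {observed_interval:.0f} h")
```

Any model trained on the logged data sees a machine that appears ten times more reliable than it is, which is exactly the bias described above.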

The negative impacts of poor data quality on AI

Poor data quality has far-reaching consequences across critical processes. The absence of data quality, especially in Artificial Intelligence initiatives, can affect an organization’s operations in several ways, including:

- CAPA management: An AI analyzing root causes based on poorly categorized incident data will lead to ineffective action plans, so the underlying problem recurs.

- Controlled documents: Incorrect metadata makes information retrieval more difficult and undermines traceability. In these cases, a search AI that delivers an obsolete document to an operator can cause workplace accidents or quality deviations.

- Internal audits: Algorithms that perform pre-audits rely on accurate logs. Deficiencies in log quality can create a false sense of security, leading to unpleasant surprises during an official external audit.

How to build governance structures to ensure data quality

To mitigate these risks, data quality must move beyond being an IT-only responsibility and become a core business strategy. Putting this approach into practice requires the following steps:

- Define data stewards: Appoint accountable owners in each department, such as Quality, Engineering, and HR, who are responsible for data integrity.

- Eliminate data silos: Fragmentation is the enemy of data quality for AI. Use integrated platforms that centralize documents, processes, and risks. With SoftExpert Suite, for example, you can unify quality and compliance management to avoid data duplication, which is a major source of inconsistency.

- Establish data entry policies: Standardization begins at data collection. Create input masks and validation rules in forms to prevent human error from contaminating the database.
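
As an illustration of the validation rules mentioned in the last item, here is a minimal sketch of a form check that rejects bad entries before they reach the database. The product code format, the allowed severity values, and the field names are assumptions made for this example, not rules from any specific system.

```python
import re

# Hypothetical rules for a nonconformity form.
PRODUCT_CODE_PATTERN = re.compile(r"[A-Z]{2}-\d{4}")
ALLOWED_SEVERITIES = {"low", "medium", "high"}
MANDATORY_FIELDS = ("product_code", "severity", "description")


def validate_entry(entry):
    """Return a list of validation errors; an empty list means the entry is accepted."""
    errors = []
    # Mandatory fields must be present and not blank.
    for field in MANDATORY_FIELDS:
        if not str(entry.get(field) or "").strip():
            errors.append(f"{field}: mandatory field is empty")
    # Input mask: the product code must match the expected pattern exactly.
    code = entry.get("product_code") or ""
    if code and not PRODUCT_CODE_PATTERN.fullmatch(code):
        errors.append("product_code: expected format AA-0000")
    # Controlled vocabulary: free-text severities are rejected.
    severity = entry.get("severity") or ""
    if severity and severity not in ALLOWED_SEVERITIES:
        allowed = ", ".join(sorted(ALLOWED_SEVERITIES))
        errors.append(f"severity: must be one of {allowed}")
    return errors


good = {"product_code": "QA-1234", "severity": "high", "description": "Seal leak on line 3"}
bad = {"product_code": "qa1234", "severity": "urgent", "description": ""}

print(validate_entry(good))
print(validate_entry(bad))
```

The first entry passes with no errors, while the second is rejected on three counts. Returning every error at once, rather than stopping at the first, lets the person filling in the form correct everything in a single pass.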

The importance of continuous monitoring for AI data quality

Data is subject to entropy, meaning it tends to degrade over time as processes change and systems are updated. For this reason, continuous data monitoring is essential. It enables the implementation of automated quality rules and early issue detection.

As part of continuous improvement initiatives, implement data health dashboards and automated alerts that notify managers of anomalies in real time. Monitor metrics such as sudden increases in incomplete fields or values that fall more than an accepted number of standard deviations from the historical mean.
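
Both kinds of check can be sketched with Python's standard `statistics` module. This is only an illustration under stated assumptions: the sample values, the 10-percentage-point jump threshold, and the 3-sigma limit are hypothetical and would need tuning for real data.

```python
from statistics import mean, stdev


def null_rate_alert(history, today, max_jump=0.10):
    """Flag a sudden increase in incomplete fields versus the recent average."""
    return today - mean(history) > max_jump


def out_of_range(baseline, new_values, max_sigma=3.0):
    """Return new values more than max_sigma standard deviations from the baseline mean."""
    mu, sigma = mean(baseline), stdev(baseline)
    return [v for v in new_values if abs(v - mu) > max_sigma * sigma]


# Hypothetical daily share of records with an empty mandatory field.
null_rate_history = [0.02, 0.03, 0.02, 0.04, 0.03]
null_rate_today = 0.21

# Hypothetical process readings from a stable period, followed by new data.
baseline_readings = [70.1, 69.8, 70.3, 70.0, 69.9, 70.2, 70.0, 69.7]
new_readings = [70.1, 74.5, 69.9]

alert = null_rate_alert(null_rate_history, null_rate_today)
outliers = out_of_range(baseline_readings, new_readings)

print(f"incomplete-field alert: {alert}")
print(f"out-of-range readings: {outliers}")
```

The limits are computed from a known-good baseline period rather than from the data being checked, so that a large anomaly cannot inflate the standard deviation and hide itself.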

To streamline data quality tracking and updates, leverage data observability tools. They help ensure that AI continues to consume reliable information throughout its lifecycle and reduce the risk of hallucinations.

Traceability and version control: the key to compliant AI data quality

In regulated industries, knowing what Artificial Intelligence decided is not enough. Organizations must also understand why the decision was made and based on which data. Traceability and data lineage make it possible to answer critical auditor questions, such as: “Which version of the standard operating procedure (SOP) did the AI use to recommend this action?”

However, implementing mechanisms that ensure end-to-end traceability is a significant challenge. To meet it, it is essential to rely on robust management systems.

SoftExpert Document, for example, provides strict document version control and a complete audit trail. This ensures that even years later, the organization can retrieve the exact data snapshot used at the time of the decision, supporting defensibility in audits or legal proceedings.
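
To make the lineage idea concrete, here is a generic, minimal sketch of what a decision record could contain. It does not represent the API of SoftExpert Document or any other product. The function names, the record fields, and the sample SOP text are hypothetical, and a production audit trail would also need tamper-evident, durable storage.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint(text):
    """SHA-256 digest that identifies the exact content the AI consumed."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def record_decision(audit_log, recommendation, sop_id, sop_version, sop_text):
    """Append a lineage entry linking a recommendation to its source document."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "recommendation": recommendation,
        "sop_id": sop_id,
        "sop_version": sop_version,
        "sop_sha256": fingerprint(sop_text),
    }
    audit_log.append(entry)
    return entry


def matches_archived(entry, archived_text):
    """Check that an archived document is the one the decision was based on."""
    return entry["sop_sha256"] == fingerprint(archived_text)


audit_log = []
sop_v3 = "SOP-017 rev 3: recalibrate the sensor every 30 days."
sop_v4 = "SOP-017 rev 4: recalibrate the sensor every 14 days."

entry = record_decision(audit_log, "Schedule recalibration", "SOP-017", 3, sop_v3)
print(json.dumps(entry, indent=2))
print(matches_archived(entry, sop_v3))  # same text as at decision time
print(matches_archived(entry, sop_v4))  # a later revision
```

Storing a content hash alongside the version number means that, during an audit, the archived document can be checked against the record: a match confirms it is the text the recommendation was based on, and a later revision will not match.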

Conclusion

Artificial Intelligence is a force multiplier. When applied to high-quality processes and data, it scales excellence. When applied to poor data, it amplifies error and risk.

For organizations operating in regulated markets, data quality is not a technical detail. It is the foundation of compliance and sustainable innovation. Before investing in complex algorithms, make sure your data foundation is solid and reliable.

Looking for more efficiency and compliance in your operations? Our experts can help identify the best strategies for your company with SoftExpert solutions. Contact us today!